The level of ROI that an enterprise can generate from AI depends on the maturity of its process streamlining, data streamlining, and automation. An organisation that still relies on manual processes without digital platforms will not see a big bang, even if it has adopted AI-enabled products. Rajesh Ganesan and Ramprakash Ramamoorthy at ManageEngine explain further.

As enterprises have become hybrid, supporting only the monitoring and management of IT operations is no longer enough. “You need full-blown observability to look at all sources from where you can gain insight. So, we are also evolving in the same way,” says Rajesh Ganesan, President, ManageEngine. “You start with service delivery, followed by basic automation of your workflows, followed by managing endpoints, and then full-blown observability,” he adds.

ManageEngine now delivers services that cover multiple threat sources, anomaly detection, and User and Entity Behaviour Analytics (UEBA). That is how basic monitoring and management is evolving into observability.

Today, ManageEngine plays in multiple domains, even within traditional IT. One of these is service management, where ManageEngine competes in a crowded marketplace. In observability, ManageEngine was a strong player in on-premises monitoring and management, and has recently extended that focus across hybrid environments, which is why it supports observability capabilities today.

But Ganesan points out that ManageEngine’s strongest lead is in Unified Endpoint Management and Security. “That is a domain where you do not find a lot of these players having a good game.”

ManageEngine supports Windows, Mac, and Linux operating environments equally well, and is now extending this capability to any kind of browser and any kind of endpoint.

With generative AI (GenAI) now extending what AI can do, ManageEngine is integrating these capabilities into its Unified Endpoint Management and Security offering to build the next generation of intelligent services. Another area where ManageEngine is applying AI is User and Entity Behaviour Analytics.

“Endpoint management and security have become very critical. And that is our strong point today. That is also in most cases our entry point into enterprises and that is how I would like to see it,” says Ganesan.

Assessing the AI hype

AI is getting a lot of hype and attention today, but for IT vendors like ManageEngine, this is not necessarily the language their enterprise customers use. Enterprise customers talk to ManageEngine about their pain points and what they expect its products and solutions to deliver.

“We believe the best AI is where the customers do not realize it is happening through AI. Their problem is solved and that is how we look at applying AI,” says Ganesan.

To provide enterprise services such as anomaly detection, observability, and User and Entity Behaviour Analytics, ManageEngine’s products gather data and use machine learning to build intelligence about a specific IT infrastructure and specific customer behaviour, producing the required patterns and predictions. So while ManageEngine is investing in AI and ML to build these capabilities and address customer pain points, its market positioning is not being driven by AI.
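To make “building patterns” about specific behaviour more concrete, here is a minimal sketch of a per-user behavioural baseline of the kind UEBA tools learn. It assumes login events arrive as hypothetical `(user, hour)` pairs and flags a login whose hour lies more than three standard deviations from that user’s norm. The function names, the z-score rule, and the thresholds are illustrative assumptions, not a description of how ManageEngine’s products work.

```python
from collections import defaultdict
from statistics import mean, pstdev

# Hypothetical helpers: a simple per-user z-score baseline, not ManageEngine's UEBA model.


def build_baselines(events):
    """Learn each user's typical login hour as (mean, population standard deviation)."""
    hours = defaultdict(list)
    for user, hour in events:
        hours[user].append(hour)
    return {user: (mean(h), pstdev(h)) for user, h in hours.items()}


def is_anomalous(baselines, user, hour, z_threshold=3.0, min_std=1.0):
    """Flag a login whose hour is more than z_threshold std devs from the user's mean."""
    if user not in baselines:
        return True  # unseen entity: no baseline yet, so surface it for review
    mu, sigma = baselines[user]
    sigma = max(sigma, min_std)  # floor the spread so very regular users are not over-flagged
    return abs(hour - mu) / sigma > z_threshold


history = [("alice", 9), ("alice", 10), ("alice", 9), ("alice", 11), ("bob", 22), ("bob", 23)]
baselines = build_baselines(history)
print(is_anomalous(baselines, "alice", 10))  # False: within Alice's usual hours
print(is_anomalous(baselines, "alice", 3))   # True: 3 a.m. is far outside her pattern
```

Login hours wrap around midnight, which this sketch ignores; a real UEBA model would also combine many signals (hosts, data volumes, peer groups) rather than a single one.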

“Our positioning will not primarily be driven based on AI. We will still talk about UEBA, still talk about SIEM, and observability. But underneath those, it will be AI powered. That would be our stance,” explains Ganesan.

Like most competitive and disruptive OEMs and IT vendors across the industry, ManageEngine is not charging a premium or markup for integrating AI and ML capabilities into its products.

While enterprises are asking for AI-enabled products and services, ManageEngine is building its own AI framework and AI stack. There are still gaps to address, but Ganesan feels that enterprises have only just begun using AI-embedded products.

Integrating AI

Since the launch of ChatGPT, there has been a lot of noise and hype about AI and its capabilities. But AI is not new to ManageEngine. According to Ramprakash Ramamoorthy, Director of AI Research, ManageEngine, the vendor has been investing in AI capabilities since 2011 and introduced intelligent features into its products in 2015, and again in 2019.

ManageEngine has always built things in-house, including its own data centres. “We have built everything from scratch. We built our statistical machines for forecasting, and we never went and hired data science experts. We hired programmers, gave them some time to get up to speed with data science and AI, and that worked. The reverse did not work, which is getting data science folks and then teaching them programming,” points out Ramamoorthy.

In the early years of AI, there were many consumer use cases in the public domain but few business use cases. So ManageEngine initially augmented its existing use cases with AI rather than creating new ones. According to Ramamoorthy, this approach was well received by customers, but it did not achieve the intended level of usage.

One lesson from this early approach was the value of adding explanations to generated insights and predictions. Once explanations were added, ManageEngine saw its enterprise end users become more confident about applying those insights as corrective and proactive measures in their workflows.

“We saw a lot of workflows that were built around the confidence interval that we gave for our predictions,” says Ramamoorthy.

During the pandemic, demand for ManageEngine’s security products boomed, including its dynamic User and Entity Behaviour Analytics. “I would say about six months after the onset of the pandemic, we had about 30% of our customers using AI,” adds Ramamoorthy.

Then ChatGPT happened. Fast forward to 2024, and AI has moved from marketing conversations into sales conversations. Many of the questions ManageEngine is asked today take the form: this is my AI strategy; what is ManageEngine’s AI strategy, and how do I align with it?

Benefits from AI use cases

Enterprise customers have become much more serious about using AI and now expect reliable predictions built into generated insights. Customers even raise support tickets asking how to improve the reliability of the predictions offered by ManageEngine products.

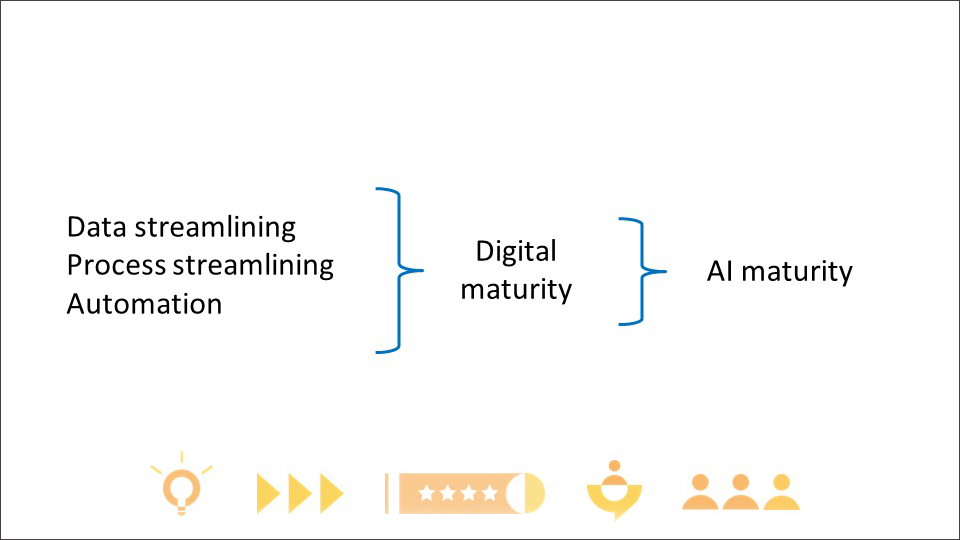

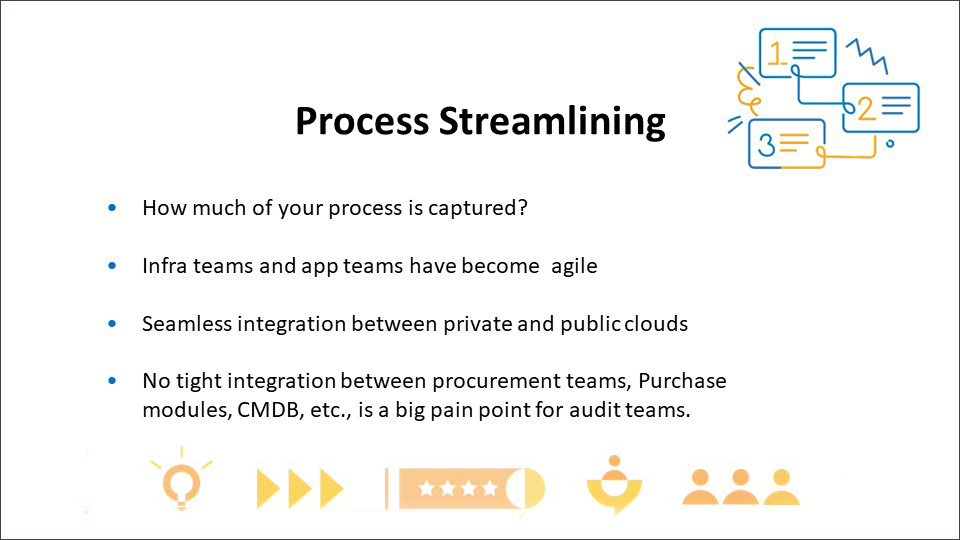

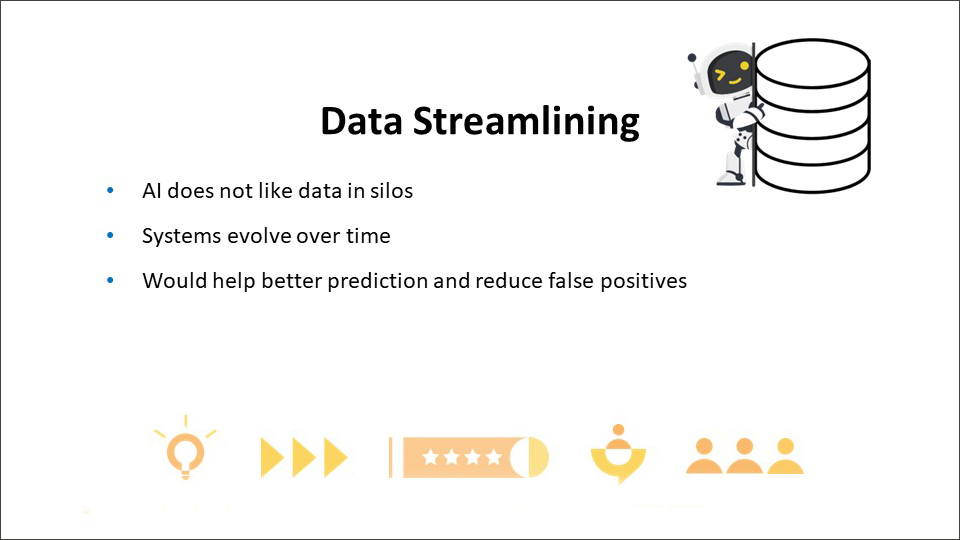

AI works best when there are no silos. For ManageEngine products, the key is to have an equal window into the data generated by monitoring, security, service delivery, and analytics. According to Ramamoorthy, what is required is to streamline processes, streamline data, and increase the use of automation. “These three can be the bedrock for your AI implementation,” he says. “If you get these three pieces right, your AI is going to be more effective.”

If the AI engine does not have complete visibility into how enterprise processes are operating; if it does not have complete access to real time data generated by users, applications, networks and devices; and if automation has not been integrated, enterprises will struggle to demonstrate any meaningful benefits from AI.

The better the AI engine’s visibility of data across the enterprise, the higher the confidence in a given insight. This allows AI to raise its business value from predictive to prescriptive: not just predicting that an incident is going to happen, but also recommending how to fix it.

The level of ROI that an enterprise can generate from AI depends on the maturity of its process streamlining, data streamlining, and automation. An organisation that still relies on manual processes and has not implemented digital platforms will not see a big bang, even if it has adopted AI-enabled products from ManageEngine.

“It is about how digitally mature you are and how well-rounded your process streamlining, data streamlining, and automation are; that would have a direct impact on the ROI that your AI is going to give you,” emphasizes Ramamoorthy.

Large language AI models

By default, most current AI use cases offered by IT vendors are built on narrow AI models. The limitation of these models is that each can do only one thing. If you have a model for invoice data extraction, it can only handle invoices; give it any other document and it will not be able to extract the required data, because it was trained only for invoices. Small language models are more effective when the context is narrowed down.

ManageEngine has seen improvements in agent productivity and reductions in mean time to respond at the help desk.

“One thing that we realized is bigger models provide more emergent behaviour. The model is able to answer things that it has not seen before. And then there are some use cases where you will need that emergent behaviour,” points out Ramamoorthy.

For reference, small language models typically have 3 to 5 billion parameters, medium language models 5 to 20 billion, and large language models more than 20 billion. ManageEngine plans to customise its large language models for specific domains.

“We have been building our own foundational models and we are tweaking it to a domain. For example, large language model for security, monitoring, service delivery. This is easier because it is all natural language. The only place where we see OpenAI coming in is in service delivery. But even then, when we build our own large language model that will become more contextual. The first party data access is what is going to differentiate us, and we want to play to our strengths,” summarizes Ramamoorthy.

This is part of explainable AI: a set of tools and frameworks, natively integrated into products and services, that help you understand and interpret the predictions made by your machine learning models. It is also where ManageEngine is pursuing a self-healing, prescriptive approach to integrating AI into IT operations.

What is AIOps?

AIOps applies artificial intelligence (AI) and machine learning (ML) technologies to the management of IT infrastructure. In 2017, Gartner coined the term AIOps to describe platforms that analyse telemetry and events and identify meaningful patterns that provide insights to support proactive responses. Using observed telemetry, AIOps helps teams collaborate better, detect issues faster, and resolve them quickly, before the end user is impacted.

Prediction is better than remediation. An AIOps-powered network management software can collect data from multiple IT sources, process that data using AI and ML technologies, and provide solutions for you.

Ultimately, the goal with AIOps platforms is to empower ITOps professionals with the insights they can use to detect issues earlier and resolve them faster.

An AIOps platform operates on a three-tier architecture: ingestion, correlation, and remediation.

In the ingestion phase, all relevant historical and real-time data collected from observability telemetry is analysed for any shortcomings or problematic patterns. The more data that is collected, the more information will be available for better context.

In the correlation phase, the accumulated data is presented to the relevant departments and analysed for its relevance to the business as a whole. This involves DevOps, SRE, and IT management teams collaborating to diagnose existing issues and prescribe solutions for future bottlenecks.
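A very simple correlation rule helps illustrate the idea: alerts that hit the same resource in quick succession are probably symptoms of one underlying incident. The sketch below groups alerts that way. The `Alert` fields, the 300-second window, and the function names are hypothetical; real AIOps platforms also correlate across topology, dependencies, and learned causal patterns.

```python
from dataclasses import dataclass, field

# Hypothetical alert and incident shapes, for illustration only.


@dataclass
class Alert:
    resource: str
    timestamp: float  # seconds
    message: str


@dataclass
class CorrelatedIncident:
    resource: str
    alerts: list = field(default_factory=list)


def correlate(alerts, window=300.0):
    """Group alerts on the same resource that arrive within `window` seconds of the previous one."""
    incidents = []
    latest = {}  # resource -> most recent incident opened for that resource
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        current = latest.get(alert.resource)
        if current is not None and alert.timestamp - current.alerts[-1].timestamp <= window:
            current.alerts.append(alert)  # same resource, close in time: same incident
        else:
            current = CorrelatedIncident(alert.resource, [alert])
            incidents.append(current)
            latest[alert.resource] = current
    return incidents


alerts = [
    Alert("db01", 0, "high query latency"),
    Alert("db01", 120, "connection pool exhausted"),
    Alert("web01", 130, "HTTP 5xx spike"),
    Alert("db01", 1000, "high query latency"),
]
for incident in correlate(alerts):
    print(incident.resource, [a.message for a in incident.alerts])
```

Four raw alerts collapse into three incidents here, which is the kind of noise reduction correlation aims for before teams start diagnosing.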

Site reliability engineering (SRE) applies software engineering techniques to automate infrastructure management, bringing development and operations teams together. As Google’s Ben Treynor Sloss put it, SRE is what happens when you ask a software engineer to design an operations team.

In the remediation phase, the collected telemetry and the work of the various business teams feed into a plan for how to act. Automating most remediation and troubleshooting not only reduces the workload on IT operations staff but also reduces the possibility of human error, resulting in faster, more precise fixes overall.

Advanced AI and ML-based algorithms support a wide range of IT operations processes faster, more clearly, and with less complexity. Data gathering and processing is one of the fundamental features of AIOps, and irrelevant data is filtered out before it is used.
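Filtering out irrelevant data can start with rules as plain as dropping low-severity events and suppressing repeats. The sketch below assumes events are dictionaries with hypothetical `timestamp`, `source`, `severity`, and `message` keys and an assumed five-level severity scale; production pipelines usually add learned noise filters on top of rules like these.

```python
# Hypothetical severity scale and event shape (dicts with timestamp/source/severity/message).
SEVERITY = {"debug": 0, "info": 1, "warning": 2, "error": 3, "critical": 4}


def filter_events(events, min_severity="warning", dedupe_window=60.0):
    """Keep events at or above min_severity, dropping repeats seen within dedupe_window seconds."""
    threshold = SEVERITY[min_severity]
    last_seen = {}  # (source, message) -> timestamp it was last seen
    kept = []
    for event in sorted(events, key=lambda e: e["timestamp"]):
        if SEVERITY.get(event["severity"], 0) < threshold:
            continue  # below the severity of interest
        key = (event["source"], event["message"])
        previous = last_seen.get(key)
        last_seen[key] = event["timestamp"]
        if previous is not None and event["timestamp"] - previous <= dedupe_window:
            continue  # repeat of the same event seen within the window
        kept.append(event)
    return kept


events = [
    {"timestamp": 0, "source": "sw1", "severity": "info", "message": "link up"},
    {"timestamp": 5, "source": "sw1", "severity": "error", "message": "port flap"},
    {"timestamp": 20, "source": "sw1", "severity": "error", "message": "port flap"},
    {"timestamp": 30, "source": "db1", "severity": "critical", "message": "disk full"},
    {"timestamp": 200, "source": "sw1", "severity": "error", "message": "port flap"},
]
for event in filter_events(events):
    print(event["timestamp"], event["source"], event["message"])
```

Because `last_seen` is refreshed even for suppressed repeats, a continuous stream of the same event stays suppressed until it pauses for longer than the window.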

Automated threshold configuration for critical performance metrics enables network admins to monitor the performance statistics of various devices closely, even in a constantly changing distributed environment. Adaptive thresholds enable automatic threshold configuration for specific monitors based on how the environment behaves at any given time.
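One simple way to build an adaptive threshold is to recompute it continuously from a sliding window of recent samples, for example the window’s mean plus k standard deviations. The sketch below assumes exactly that rule; the class name, window size, `k`, and `min_samples` are hypothetical choices, and commercial tools may use seasonal or ML-based baselines instead.

```python
from collections import deque
from statistics import mean, pstdev


class AdaptiveThreshold:
    """Hypothetical monitor threshold that follows recent behaviour: mean + k * std over a window."""

    def __init__(self, window=60, k=3.0, min_samples=10):
        self.samples = deque(maxlen=window)  # only the most recent `window` values are kept
        self.k = k
        self.min_samples = min_samples

    def current_threshold(self):
        if len(self.samples) < self.min_samples:
            return None  # not enough history to set a threshold yet
        return mean(self.samples) + self.k * pstdev(self.samples)

    def observe(self, value):
        """Return True if value breaches the threshold learned so far, then learn from it."""
        threshold = self.current_threshold()
        breached = threshold is not None and value > threshold
        self.samples.append(value)
        return breached


monitor = AdaptiveThreshold(window=20, k=3.0, min_samples=5)
for latency_ms in [50, 52, 49, 51, 50, 48, 52, 50, 90]:
    if monitor.observe(latency_ms):
        print(f"threshold breached: {latency_ms} ms")
```

Because breaching values are also added to the window, a sustained shift gradually becomes the new normal; whether that is desirable depends on the monitor.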

Troubleshooting issues quickly plays a significant role in reducing mean time to repair (MTTR) and increasing network efficiency, thereby reducing network downtime.

AIOps aggregates the collected data and identifies relationships and causality, providing IT with an overview of what’s at stake. This enables IT operations teams to correlate and interpret information, as needed, to understand and manage issues quickly.

Related metrics and KPIs such as MTTR, mean time to acknowledge (MTTA), and mean time to failure (MTTF) can also be obtained, which helps improve event management and the analysis of metrics such as false-positive rates, signal-to-noise ratios, and enhancement statistics. This gives in-depth visibility into the impact of applications on the network and vice versa, and helps pinpoint faults in the network or applications.
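The arithmetic behind these KPIs is straightforward once incident timestamps are recorded. The sketch below assumes common definitions: MTTA from detection to acknowledgement, MTTR from detection to resolution, and MTTF as the operating time between recovering from one failure and the next failure starting. Organisations define these slightly differently, so treat the definitions and the hypothetical `IncidentRecord` type as assumptions.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class IncidentRecord:
    detected: float      # hours from an arbitrary start
    acknowledged: float
    resolved: float


def incident_kpis(incidents):
    """MTTA, MTTR and MTTF in hours for a sequence of incidents on one service."""
    ordered = sorted(incidents, key=lambda i: i.detected)
    mtta = mean(i.acknowledged - i.detected for i in ordered)
    mttr = mean(i.resolved - i.detected for i in ordered)
    # Operating time between recovering from one failure and the next one starting.
    uptimes = [nxt.detected - prev.resolved for prev, nxt in zip(ordered, ordered[1:])]
    mttf = mean(uptimes) if uptimes else None
    return {"MTTA": mtta, "MTTR": mttr, "MTTF": mttf}


incidents = [
    IncidentRecord(detected=0, acknowledged=0.5, resolved=2),
    IncidentRecord(detected=10, acknowledged=10.25, resolved=11),
    IncidentRecord(detected=20, acknowledged=21, resolved=23),
]
print(incident_kpis(incidents))
```

For these three incidents, MTTR works out to 2 hours and MTTF to 8.5 hours (uptimes of 8 and 9 hours).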

Top suspected causes, the root cause of issues, and the path taken by each individual request can all be analysed and tracked with the help of a single application. Features such as root-cause analysis and network path analysis help you drill down to the root cause of an issue, collect relevant data, and remediate it before the end user or client is affected. Once alerted, the IT team is presented with the top suspected causes and the evidence behind the AIOps platform’s conclusions. This reduces the staff hours required for routine troubleshooting.
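A crude way to produce a “top suspected causes” list is to rank components by how far their metrics moved away from normal when the incident was detected, returning the deviation as evidence. The sketch below assumes that single heuristic; the function name and data layout are hypothetical, and real root-cause analysis also weighs dependency topology, traces, and change history.

```python
from statistics import mean, pstdev


def top_suspects(baseline, incident, top_n=3):
    """Rank components by how far their incident-time metric deviates from baseline.

    baseline: component -> list of historical metric values (hypothetical layout)
    incident: component -> metric value observed when the incident was detected
    Returns (component, z_score) pairs, strongest deviation first.
    """
    scores = []
    for component, value in incident.items():
        history = baseline.get(component)
        if not history:
            continue  # no baseline, so no evidence either way
        sigma = pstdev(history) or 1e-9  # guard against a perfectly flat history
        scores.append((component, abs(value - mean(history)) / sigma))
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:top_n]


baseline = {"db": [10, 11, 9, 10], "cache": [5, 5, 6, 4], "web": [100, 102, 98, 100]}
incident = {"db": 40, "cache": 6, "web": 101}
for component, z in top_suspects(baseline, incident):
    print(f"{component}: {z:.1f} standard deviations from normal")
```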

AIOps shines brightest where humans struggle: analysing vast amounts of data. This is especially useful for modern, highly distributed architectures in which tens of thousands of instances run simultaneously. An inventory of reports, both manual and automated, allows you to log, analyse, and summarise practical data relevant to your network’s health and performance.

AIOps helps automate closed-loop remediation for known issues. For example, it can spin up additional instances of an application to counter slowdowns and quickly remediate shortcomings. Workflow automation lets network administrators run predefined sets of actions automatically, built through a flexible drag-and-drop interface.
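The sketch below illustrates a closed loop for one known issue: a slowdown caused by high CPU triggers a scale-out, and anything not in the playbook is escalated to a human. The scaling rule is similar in spirit to the one documented for the Kubernetes Horizontal Pod Autoscaler (desired = ceil(current × observed ÷ target)); the issue names, the 60% target, the cap of 20 instances, and the function names are hypothetical.

```python
import math


def desired_instances(current, cpu_utilisation, target=0.6, max_instances=20):
    """Instances needed to bring average CPU utilisation (0-1) back toward `target`."""
    needed = math.ceil(current * cpu_utilisation / target)
    return max(current, min(needed, max_instances))  # this loop only scales out, with a cap


def remediate(issue, state):
    """Closed loop for a known issue: look up the fix, apply it, report what was done."""
    if issue == "slowdown_high_cpu":  # hypothetical known-issue signature
        count = desired_instances(state["instances"], state["cpu"])
        return {**state, "instances": count, "action": f"scaled out to {count} instances"}
    # Anything not in the known-issue playbook goes to a human instead.
    return {**state, "action": "escalate to on-call"}


print(remediate("slowdown_high_cpu", {"instances": 4, "cpu": 0.8}))
print(remediate("unknown_error", {"instances": 4, "cpu": 0.3}))
```

Escalating unknown issues rather than guessing keeps the automated loop limited to failures whose fix is already trusted.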

ML-powered forecasting reports can predict the number of days left before system resources such as memory, disk space, and CPU are exhausted.
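The simplest version of such a forecast fits a trend line to recent usage and extrapolates to capacity. The sketch below swaps in plain least-squares linear regression for whatever ML models a product might actually use, and assumes one reading per day in the same unit as the capacity; the function name is hypothetical.

```python
def days_until_exhausted(daily_usage, capacity):
    """Fit a least-squares line to daily usage and extrapolate to capacity.

    daily_usage: one reading per day, oldest first, in the same unit as capacity.
    Returns estimated days after the last reading, or None if usage is flat or falling.
    """
    n = len(daily_usage)
    if n < 2:
        raise ValueError("need at least two readings")
    xs = range(n)
    x_mean = (n - 1) / 2
    y_mean = sum(daily_usage) / n
    slope = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, daily_usage)) / sum(
        (x - x_mean) ** 2 for x in xs
    )
    if slope <= 0:
        return None  # no growth trend, so no exhaustion date
    intercept = y_mean - slope * x_mean
    day_full = (capacity - intercept) / slope  # day index where the fitted line hits capacity
    return max(0.0, day_full - (n - 1))


# Disk usage in GB on a 200 GB volume, growing by about 10 GB a day.
print(days_until_exhausted([100, 110, 120, 130], capacity=200))
```

A linear trend ignores weekly cycles and sudden jumps, which is where more sophisticated forecasting models earn their keep.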

AI-powered chatbots

The impact of AI on service desks will be truly valued when AI can take on the mundane tasks service desk teams would rather not do, as well as the tasks humans struggle with. AI’s capabilities will include smart automation, strategic insights, and predictive analytics.

Take the routing of incoming tickets: typically, it is a time-consuming manual task. Some help desks already automate it with static rules that categorise each ticket, but these rules cannot adapt or improve.

This is where machine learning comes into play. Machine learning models can learn from historical service desk data while processing live data, becoming increasingly accurate and efficient over time.
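As an illustration of a router that learns rather than following static rules, the sketch below uses a multinomial naive Bayes classifier over ticket text. Naive Bayes is a swapped-in technique chosen for brevity, not necessarily what any ITSM product uses, and the `TicketRouter` class and categories are hypothetical. Because it only keeps counts, the same `learn` method absorbs historical tickets and, later, newly resolved live tickets.

```python
import math
import re
from collections import Counter, defaultdict


class TicketRouter:
    """Hypothetical multinomial naive Bayes router; keeps learning as tickets are resolved."""

    def __init__(self):
        self.word_counts = defaultdict(Counter)  # category -> word frequencies
        self.category_counts = Counter()
        self.vocabulary = set()

    @staticmethod
    def tokenize(text):
        return re.findall(r"[a-z0-9]+", text.lower())

    def learn(self, text, category):
        """Update counts with one resolved ticket (historical or live)."""
        words = self.tokenize(text)
        self.word_counts[category].update(words)
        self.category_counts[category] += 1
        self.vocabulary.update(words)

    def predict(self, text):
        """Return the category with the highest log posterior for the ticket text."""
        total = sum(self.category_counts.values())
        best, best_score = None, -math.inf
        for category, count in self.category_counts.items():
            counts = self.word_counts[category]
            denom = sum(counts.values()) + len(self.vocabulary)  # Laplace smoothing
            score = math.log(count / total)
            for word in self.tokenize(text):
                score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best, best_score = category, score
        return best


router = TicketRouter()
history = [
    ("printer not printing paper jam", "printing"),
    ("printer toner low", "printing"),
    ("cannot connect to vpn", "network"),
    ("wifi keeps dropping", "network"),
    ("reset my password please", "access"),
    ("account locked password expired", "access"),
]
for text, category in history:
    router.learn(text, category)
print(router.predict("the printer has a paper jam"))
```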

Similar AI-based models can eliminate the human time and effort required for a variety of tasks, from suggesting suitable windows for patch updates to providing insights into change planning and implementation, predicting IT problems, and monitoring for requests that could violate service level agreements.

Context-specific chatbots are already aiding technicians in performing simple service desk tasks such as approving requests or adding notes to requests through simple conversations. On the end-user front, similar chatbots are helping end users to find solutions to their simple issues and to track the status of their requests without having to reach out to a technician.

Eventually, AI-based virtual service desk assistants are likely to take a bigger load off technicians by becoming the first point of contact for end users contacting the service desk. They could also go on to perform service desk activities such as creating requests on the end user’s behalf. Coupled with machine-learning-based categorisation and assignment, an AI-based service desk can provide a better service delivery experience for the end user.

Each of these AI models will be specific to each service desk because the models are shaped by the service desk data they are based on. That data will be unique to each service desk and AI can only be as effective as the data and knowledge that feeds it.

On top of a well-maintained repository of data, the AI model will also require a properly documented set of resolutions, workarounds, and knowledge articles. Take an AI-based categorisation or prioritisation model: training it will require a historical database of all requests with parameters such as request type, level, impact, urgency, and site.

Provided the supporting documentation is in place, chatbots enabled by natural language processing (NLP) can be trained to understand text input from end users and handle particular categories of requests and incidents.

Let us consider print issues as one use-case example. Using narrow AI, which is intelligence that is available now, chatbots can help end users resolve printer issues by suggesting solutions in the order of their success percentage.
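Ordering suggestions by success percentage needs only a record of how often each fix was offered and how often it worked. The sketch below assumes such hypothetical counts and pushes rarely tried fixes to the end, since a 100% success rate from two attempts says little. The function name and the `min_attempts` cut-off are illustrative assumptions.

```python
def rank_solutions(stats, min_attempts=5):
    """Order candidate fixes by observed success percentage.

    stats: solution -> (times_suggested, times_it_resolved_the_issue)
    Fixes tried fewer than min_attempts times go last: their rate is not yet reliable.
    """
    def sort_key(item):
        _, (attempts, successes) = item
        rate = successes / attempts if attempts else 0.0
        return (attempts >= min_attempts, rate)

    ranked = sorted(stats.items(), key=sort_key, reverse=True)
    return [(name, round(100 * s / a, 1) if a else 0.0) for name, (a, s) in ranked]


printer_fixes = {
    "Restart the print spooler": (40, 30),
    "Reinstall the printer driver": (25, 20),
    "Power-cycle the printer": (50, 20),
    "Update the printer firmware": (2, 2),
}
for name, pct in rank_solutions(printer_fixes):
    print(f"{pct:5.1f}%  {name}")
```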

Using general AI, which is more effective than narrow AI but could take longer to develop and to put into practice, chatbots could eventually go beyond simply suggesting solutions to issues that have already occurred, instead predicting printer issues before they happen.

For example, machine-learning-based models could automatically create service requests for replacing toner and other supplies before they run out. The chatbot could then cross-reference the requests database to determine if a request had already been created when a user reports the same issue. If that issue has already been reported, the chatbot could relay this information back to the user.
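The cross-referencing step can be sketched with an in-memory stand-in for the requests database. To keep the focus on avoiding duplicates, this sketch swaps the ML-based prediction for a simple toner-level threshold; the `RequestDesk` class, the 15% reorder level, and matching on printer plus issue are all hypothetical assumptions.

```python
from dataclasses import dataclass


@dataclass
class Request:
    asset: str
    issue: str
    status: str = "open"


class RequestDesk:
    """Hypothetical in-memory stand-in for a service desk requests database."""

    def __init__(self):
        self.requests = []

    def find_open(self, asset, issue):
        return next((r for r in self.requests
                     if r.asset == asset and r.issue == issue and r.status == "open"), None)

    def create(self, asset, issue):
        request = Request(asset, issue)
        self.requests.append(request)
        return request


def check_toner(desk, printer, toner_level, reorder_at=0.15):
    """Proactively raise a replacement request before toner runs out (no duplicates)."""
    if toner_level <= reorder_at and not desk.find_open(printer, "toner"):
        desk.create(printer, "toner")


def chatbot_reply(desk, printer, issue):
    """Tell the user about an existing request instead of creating a duplicate."""
    if desk.find_open(printer, issue):
        return f"A request for '{issue}' on {printer} is already open; no need to raise another."
    desk.create(printer, issue)
    return f"I have created a request for '{issue}' on {printer}."


desk = RequestDesk()
check_toner(desk, "PRN-3F", toner_level=0.10)  # raised before the user notices
print(chatbot_reply(desk, "PRN-3F", "toner"))  # user reports it: already open
```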

There are many other ways chatbots can provide value, from managing remote user requests to adding notes, comments, or annotations to those requests. Importantly, they can take these tasks off the human workload.

In knowledge management, machine-learning-based models can be trained to identify success rates for each solution based on past performance. This can be achieved by considering ticket reopen rates, article ratings from end users and technicians, and end-user acknowledgment. These models can also be trained to identify incident categories that occur most frequently.
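Before any model is trained, the three signals named above can be blended into a single success score per article, which also makes a sensible training target. The sketch below assumes a weighted average with hypothetical weights (50% not reopened, 30% ratings, 20% acknowledgement) and ratings on a 1 to 5 scale; it also counts the most frequent incident categories with `collections.Counter`.

```python
from collections import Counter


def solution_score(resolved, reopened, ratings, acknowledged, weights=(0.5, 0.3, 0.2)):
    """Blend three success signals for one knowledge article into a 0-1 score.

    resolved: tickets closed using this article; reopened: how many of those reopened
    ratings: 1-5 ratings from end users and technicians
    acknowledged: closures the end user confirmed as fixed
    """
    if resolved == 0:
        return 0.0
    not_reopened = 1 - reopened / resolved
    # Map the average 1-5 rating onto 0-1; with no ratings, assume a neutral 0.5.
    avg_rating = (sum(ratings) / len(ratings) - 1) / 4 if ratings else 0.5
    ack_rate = acknowledged / resolved
    w_reopen, w_rating, w_ack = weights
    return w_reopen * not_reopened + w_rating * avg_rating + w_ack * ack_rate


def most_frequent_categories(ticket_categories, n=3):
    """Incident categories that occur most often, with their counts."""
    return Counter(ticket_categories).most_common(n)


print(round(solution_score(resolved=20, reopened=2, ratings=[5, 4, 5], acknowledged=15), 3))
print(most_frequent_categories(["printing", "network", "printing", "access", "printing", "network"]))
```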

Machine learning models can also deliver insight on which categories need more knowledge from the IT service desk team to build relevant solutions and knowledge base articles to help both end users and technicians.

In service request management, machine learning models and algorithms can be trained to dynamically automate service request workflows based on request history. These machine-learning-based automation models continue to learn with each bit of live data to fine-tune workflows for higher efficiency. In IT change management, AI can minimise the risk of human error by analysing huge volumes of data that people often struggle with.

AI can also help service desk teams in the monitoring and management of hardware and software assets. Machine learning systems can constantly monitor the performance of a configuration item or check the available performance data and predict breakdowns. AI can help flag anomalies and generate critical warnings by connecting the dots across multiple areas, which would be impossible to achieve manually.

AI has huge potential to revolutionise the way IT service desks and service desk teams work, but service desks must undergo preparation if they’re to realise the full benefit. To ensure AI delivers its full potential, service desk teams must properly document all requests, problems, and changes, maintain an accurate service desk database, and build an in-depth knowledge base as ITSM tool vendors begin to integrate AI into their products.